Epic Everyday immerses the user in a macroscopic sea of sound and visuals, allowing them to inhabit a less-than-ordinary experience. The user can engage with different channels throughout the experience. By inhabiting everyday objects, users can experience what is usually ignored, challenging our preconceptions of what is important and highlighting the oddity of inhabiting ignored spaces.

We are finding the sublime in the most ordinary of places.

Four-week student project.

Collaboration with: Claire Le Noble & Jason Wong

Early sketches, collaboration with: Jason Wong & Yeawon Kim

Specification: VR, IxD, 3D modeling, prototyping, programming

Execution: Autodesk ReMake, SteamVR, HTC Vive, Unity, C#, JavaScript, Blender, After Effects, Photoshop

// Context

Everyday, mundane objects are often overlooked. We don’t consider the experiential qualities of the ubiquitous objects in our daily lives. In Epic Everyday, virtual reality is used to transform how mundane objects are experienced. The installation presents video footage and the soundscape from a household environment. Once immersed in the virtual environment, the user experiences a heightened perspective of everyday objects through a dramatic shift in scale and a magnified soundscape. Although Epic Everyday is constructed as a computer simulation, we propose that it would be a real space in a person’s home, and that this content would be broadcast like a webcam. These cameras would be placed with intent by voluntary users to capture ordinary experiences in a distinct way. By focusing on ordinary objects we can subvert what traditional film and TV have allowed viewers to do: escape from reality. Epic Everyday allows the viewer to escape their own reality by being further immersed in the everyday. The specific objects that we have chosen for the experiences are things that we interact with every day. We disregard these objects, and by highlighting their value in the virtual reality space we can create experiences that show how the mundane can be compelling.

// Process

I started this project with an interest in situating the viewer in a microscopic VR space. To me this is something that is rarely possible in the real world, but recently I had the chance to experience it, well, sort of. I had just gotten back from visiting Death Valley National Park, which situates you in many vast spaces and makes you realize your scale in the world. This micro/macro feeling was really interesting in such a unique and extreme environment. This became a focus I wanted to explore in my piece, and since the first part of the module was learning how to use photogrammetry to recreate an object as a textured 3D mesh, I decided to find a way to scan an object that allowed for that experience to occur. After a bit of experimenting I decided to work with a museum-grade replica saber-tooth cat skull that I have at home. (Yes, I have a museum-grade replica saber-tooth cat skull.)

I specifically wanted to use the inner jaw of the skull as an environment for the user to be immersed in, while still allowing them to explore. This proved to be a much more difficult task than I first imagined. Getting detailed images of the inside of the mouth was hard enough. There's only so much room between the jaws (I wanted them closed), and it’s way too small for a camera. The challenge is compounded by the fact that you cannot use lighting that creates shadows, or change the position of the object while taking photos. After several failed attempts I finally reached out to my girlfriend for help (she has used photogrammetry in her work before) and was able to get a very nice scan.

An initial photogrammetry scan for the project (above)

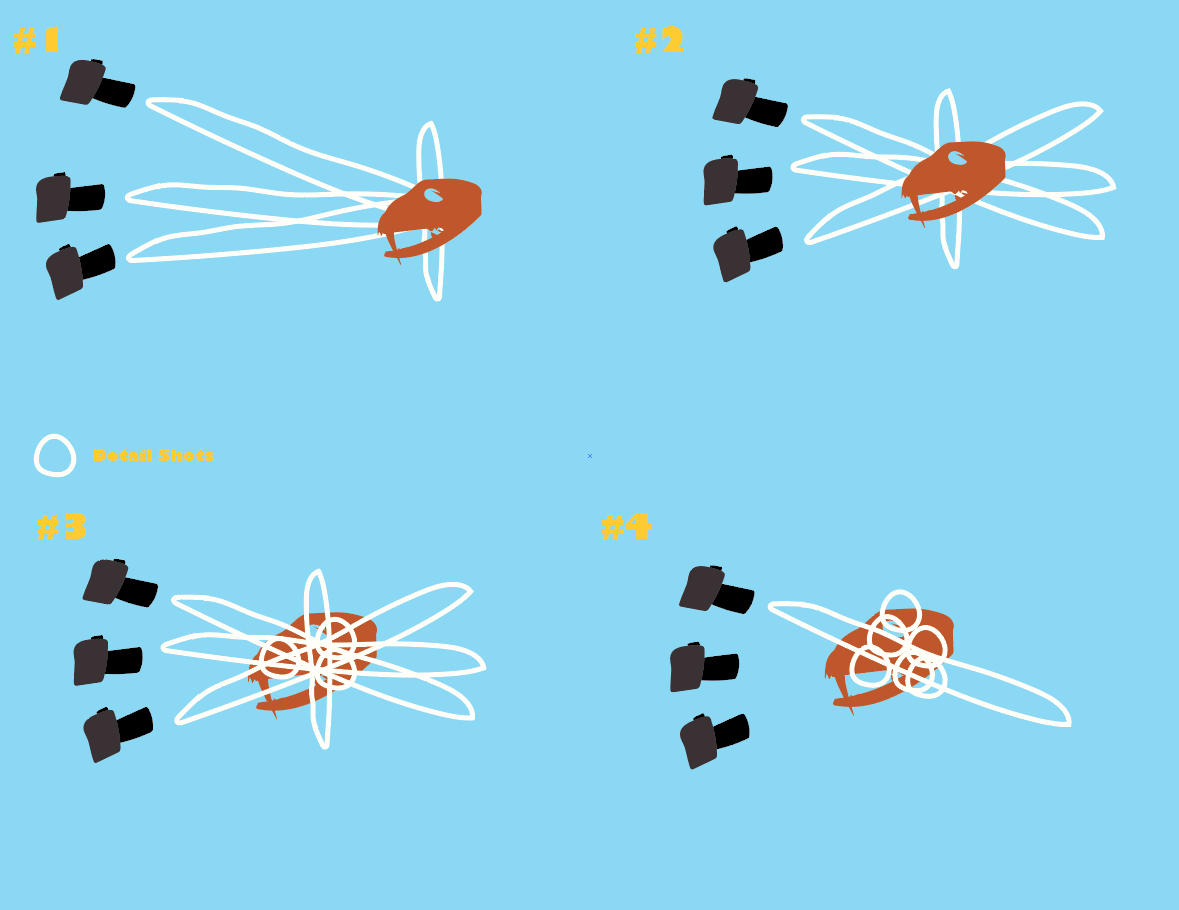

Around this point I began working with Jason and Yeawon. We wanted to bring all three of our scans together to further explore this idea of the microscopic user. We began using multiples of each of our scans to create a spherical world that the user could explore, and more specifically one that allowed them to view each individual scan in much greater detail than its original, and collectively as a surreal environment. This led us to question how we could take this heightened sensory experience to the next level. After more exploration we landed on the idea of creating feeling stations, where the user could physically feel a tactile approximation of several selected vantage points. Below is the experience we created.

Early VR experience exploring virtual and physical interactions (above)

After that sketch, Jason and I started working with Claire on developing a new iteration of the piece. We were still interested in the macro/micro aspect of the previous sketch. We also became interested in how we could maximize the small platform that the Vive gives the user to walk around. More specifically, we were intrigued by the idea of using the VR environment to make a small location feel much bigger. In addition, we wanted to think of a way to make VR something that reduced stimulation rather than adding to it, as most VR experiences do. And we were all interested in how we could creatively implement sound in a VR experience. Most of the games we had played or experienced took a very literal approach to sound, and really only used it as a traditional video game would. At this point we knew what interactions we wanted to explore, but as a group we got stuck in a circle of disagreement about what the project was about and how we should frame it.

After a few days of discussion we landed on the idea of creating an experience that would allow the user to explore the livelihood of everyday objects. Through research we found a relatively new online trend, where people host YouTube channels showing certain aspects of life. Two examples we really gravitated to were forestry departments hosting animal cams, like the eagle cam, and people (specifically in South Korea) who post videos of themselves eating. We started to question what the future of these already odd, TV-like YouTube channels would be, and more specifically how they would be situated in an everyday VR experience. We started to think about how the Internet of Things would probably be part of this experience, which, on a semi-related note, led us to the idea of being able to tune into being an object in a house.

// Critical Reflection

This project had many ups and downs, and I really wish I had made different decisions, but in the end it taught me a lot. One of the biggest things this piece made clear was that making some sort of sketch from the start of a project is absolutely crucial. This is even more true if you don’t know what you are trying to focus on as a concept. We essentially wasted several days, in what was already a short project, not making and just searching for a “perfect” concept. Although in the end I feel like we made a successful piece, I feel like it would have been that much stronger if we had started making and sketching from day one. The second thing that was made clear through working on this piece is that some people just don't work well together. This happened for many reasons: different working styles, different interests, different ways of communicating, …

I think the project itself made sketching and prototyping hard because it’s in Unity and we were designing for an immersive environment. But I think this challenge really underlined the necessity of this process.

In future iterations I think it would be interesting to really work with live images or 3D videos. If I were to go that route I would want to composite out the video, creating meshes for things like the fridge, tables, and, well, anything that would sell the depth of the environment. I think the “stock” Unity texture and feel really detracted from the user actually feeling the full effect of being a bag, lightbulb, or cup.

One main focus that I would like to elaborate on in the future is the interaction and experience we captured in the relationship between the user and the ice cubes. That experience really seemed to transport the user into a moment where they felt frozen and forgot that they were in a headset with headphones. I think bringing that experience to each of the other scenes would be great, and I would like to further explore other experiences. I think each of the three experiences, and possible new ones, could be their own pieces. This would allow us to concentrate on refining and building a truly captivating immersive experience.

// Questions

Can virtual reality be used to elevate a banal experience into something compelling?

Currently, VR is usually human-centric in regard to scale and point of view, but what happens when we shift this perspective to that of an object’s scale?

What new soundscape do we become immersed in?

How does our understanding of a common household space change after this experience?

// Inspirations

The classic European tradition of still life painting has commonly depicted everyday objects such as food or ceramics. These are objects that either signify material pleasures or reflect the time and culture of the period. The still life has often been used for formal technical experiments in modern art.

Basket Of Fruit

Caravaggio

1596

Still Life with a Beer Mug

Fernand Léger

1921–1922

4' 33" is a composition by John Cage with no written notes, in which the performer is silent. A performer enters the stage and doesn’t play an instrument for the entire piece. Cage illustrates that even in perceived silence, sound and noise are ubiquitous.

4' 33"

John Cage

1952

Can the traditionally passive view of a still life become something different in another medium?

Virtual Reality affords the opportunity to be thoroughly immersed in new environments. This can be exploited to put a new lens on spaces that haven’t previously been experienced. No longer is it necessary to passively view a painting; users can experience the still life by inhabiting the space.

We adopted elements of Cage’s artistic intent and applied them to the still life in Virtual Reality. If we reduce our everyday experiences to the most mundane spaces, can they reveal something that we haven’t previously considered? What is revealed when the viewer’s focus is shifted from external consumption to internal discovery of banal spaces?

// Brief

Assignment/Layer 1: Scan

VR and AR use information from the real world to construct the virtual. The site, and everything within it, forms the foundation of the experience. How are VR and AR sensors and cameras seeing, understanding, inferring, and translating the physical space and context into the virtual?

Assignment/Layer 2: Embody

Virtual and augmented reality are not real. But at the same time they offer a real experience, brought to life through the virtual camera and head-mounted display. How does this technology enable us to see, move, interact, and construct a new reality? How do you make someone feel immersed? How does this immersive view of the immaterial influence our perception, alter our spatial senses, and control our behavior - how we move our bodies in both the virtual and physical space?

Assignment/Layer 3: Interact

How might the virtual have influence over the physical world – our spaces, objects, bodies, and minds? How might it intervene, inform, or affect? What new hybrid interactions, aesthetics, objects, or places will emerge in the material world? How might the virtual hide, integrate, or acknowledge its connection to the physical infrastructure - the computer tower, display, head-mounted display, office chairs, and other props? Over time, how will it affect our perceived boundary between the real and virtual?

Final Project: Everyday Immersions

Virtual Reality (VR) and Augmented Reality (AR) are an expanding array of immersive technologies: hardware, software, spatial sensors, cameras, interfaces, controllers, and head-mounted displays (HMD) that read and interpret the real world, combine it with the virtual, and in the process, blur the boundary in-between. Through this complex layering of the real and virtual, the tangible and intangible, the person, their body, their brain, and their way of perceiving is placed in the middle, somewhere new. They are in-between realities, in-between places, and in-between their real self and their virtual representation. Welcome to immersive living, a new type of “reality”. As VR and AR technologies become increasingly ubiquitous and cultural acceptance grows, how might these technologies begin to permeate through our world, making immersive experiences more routine – like putting on a watch or diving in for a 'special' occasion? How will these augmented layers of sensing technologies and media begin to shape the material surfaces of our places, things, and bodies? What new strange juxtapositions of views, aesthetics, and interactions will be created from this layering, mixing, reflecting and collaging of experiences? How do they interact, inform, and affect one another? What are the implications of this shifting boundary? Will these seamed experiences and resolutions assimilate, mingle, or interrupt? How will these emerging technologies redefine our everyday experiences? Outside of a few specific scenarios, there is not one singular vision of how VR or AR will ultimately fit (or not) within our pre-existing rhythms, rituals, and routines. Through a series of investigative assignments [wk1-2], we will explore the layering of the real and the virtual through the expansive VR and AR technological stack. We will use hands-on experimentation to push beyond stereotypical tropes and demo content, to uncover the strange and overlooked details in the in-betweens.

All works © Michael Milano 2010-2019. Please do not reproduce without the express written consent of Michael Milano.